Analyzing Mobility Hotspots with MovingPandas & CARTO

Mobility data is a cornerstone of geospatial analysis across many industries. Gaining insights from movement and behavior data is key to decision making - from adapting mobile data coverage to meet event-based demand to assessing the insurance risk profile of drivers living in different areas.

Traditionally, however, mobility data has posed a challenge for geospatial analysts. Data is often fragmented and proprietary, and by its very nature is enormous in size - making it difficult to analyze with traditional GIS software.

By adopting a cloud-native approach - with data hosted and processed in a cloud data warehouse - organizations are increasingly able to leverage mobility data to make more informed decisions at scale.

In this blog post, we’ll walk through a tutorial on one particular type of mobility data: trajectories. This data tells us not only where people have been, but also where they are coming from and going to, which is incredibly useful for predictive analytics!

In this tutorial, we’ll be showcasing how you can work with MovingPandas—a Python library for handling movement data—and CARTO’s cloud-native geospatial analytics platform to turn raw mobility data into a space-time hotspot analysis.

To follow along, you’ll need access to a CARTO account. If you don’t have one already, you can sign up for a free 14-day trial here.

To prepare the data for the hotspot analysis, we’ll convert the polyline string into a list of coordinate pairs and remove records with missing data. Additionally, we’ll calculate timestamps for each trajectory fix based on the Unix timestamp of the trip start and a 15-second sampling interval.

Run the below code to do this.
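As a rough illustration of this preprocessing step, here is a minimal, standard-library sketch. The column names (`TRIP_ID`, `TIMESTAMP`, `MISSING_DATA`, `POLYLINE`) and the sample rows are assumptions made for the example, not details confirmed by this post, so adjust them to match your own table.

```python
import json

# Hypothetical sample rows; the column names are assumptions about the input table.
rows = [
    {"TRIP_ID": "t1", "TIMESTAMP": 1372636858, "MISSING_DATA": "False",
     "POLYLINE": "[[-8.618643,41.141412],[-8.618499,41.141376],[-8.620326,41.14251]]"},
    {"TRIP_ID": "t2", "TIMESTAMP": 1372637303, "MISSING_DATA": "True",
     "POLYLINE": "[[-8.639847,41.159826]]"},
    {"TRIP_ID": "t3", "TIMESTAMP": 1372636951, "MISSING_DATA": "False",
     "POLYLINE": "[]"},
]


def parse_polyline(text):
    """Turn a JSON-style polyline string into a list of (lon, lat) tuples."""
    return [(float(lon), float(lat)) for lon, lat in json.loads(text)]


def prepare(rows):
    """Drop rows flagged as incomplete or with an empty polyline, then parse the rest."""
    cleaned = []
    for row in rows:
        if str(row["MISSING_DATA"]).strip().lower() == "true":
            continue  # record is flagged as having missing data
        coords = parse_polyline(row["POLYLINE"])
        if not coords:
            continue  # nothing to build a trajectory from
        cleaned.append({
            "trip_id": row["TRIP_ID"],
            "start": int(row["TIMESTAMP"]),
            "coords": coords,
        })
    return cleaned


trips = prepare(rows)
print(trips)
```

On real data you would more likely do this with a pandas DataFrame, but the logic is the same: filter first, then parse.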

You can see a snapshot of the output of this below.

The next step is to calculate a timestamp for each trajectory fix, based on the Unix timestamp of the trip start and a 15-second sampling interval, which we can do with the following code:
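The timestamp arithmetic can be sketched with the standard library alone. The function name `fix_timestamps` and the sample coordinates are hypothetical; the sketch also assumes that the first fix coincides with the trip start and that timestamps are in UTC.

```python
from datetime import datetime, timedelta, timezone

SAMPLING_INTERVAL_S = 15  # seconds between consecutive fixes, as described in the text


def fix_timestamps(trip_start_unix, n_fixes, interval_s=SAMPLING_INTERVAL_S):
    """Return one UTC datetime per fix, assuming the first fix is at the trip start."""
    start = datetime.fromtimestamp(trip_start_unix, tz=timezone.utc)
    return [start + timedelta(seconds=i * interval_s) for i in range(n_fixes)]


# Hypothetical trip: a Unix start time and three (lon, lat) fixes.
coords = [(-8.618643, 41.141412), (-8.618499, 41.141376), (-8.620326, 41.14251)]
times = fix_timestamps(1372636858, len(coords))

# Pair each timestamp with its coordinate to get time-stamped fixes.
fixes = list(zip(times, coords))
for t, (lon, lat) in fixes:
    print(t.isoformat(), lon, lat)
```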

Again, you can see a snapshot of the output of this below.

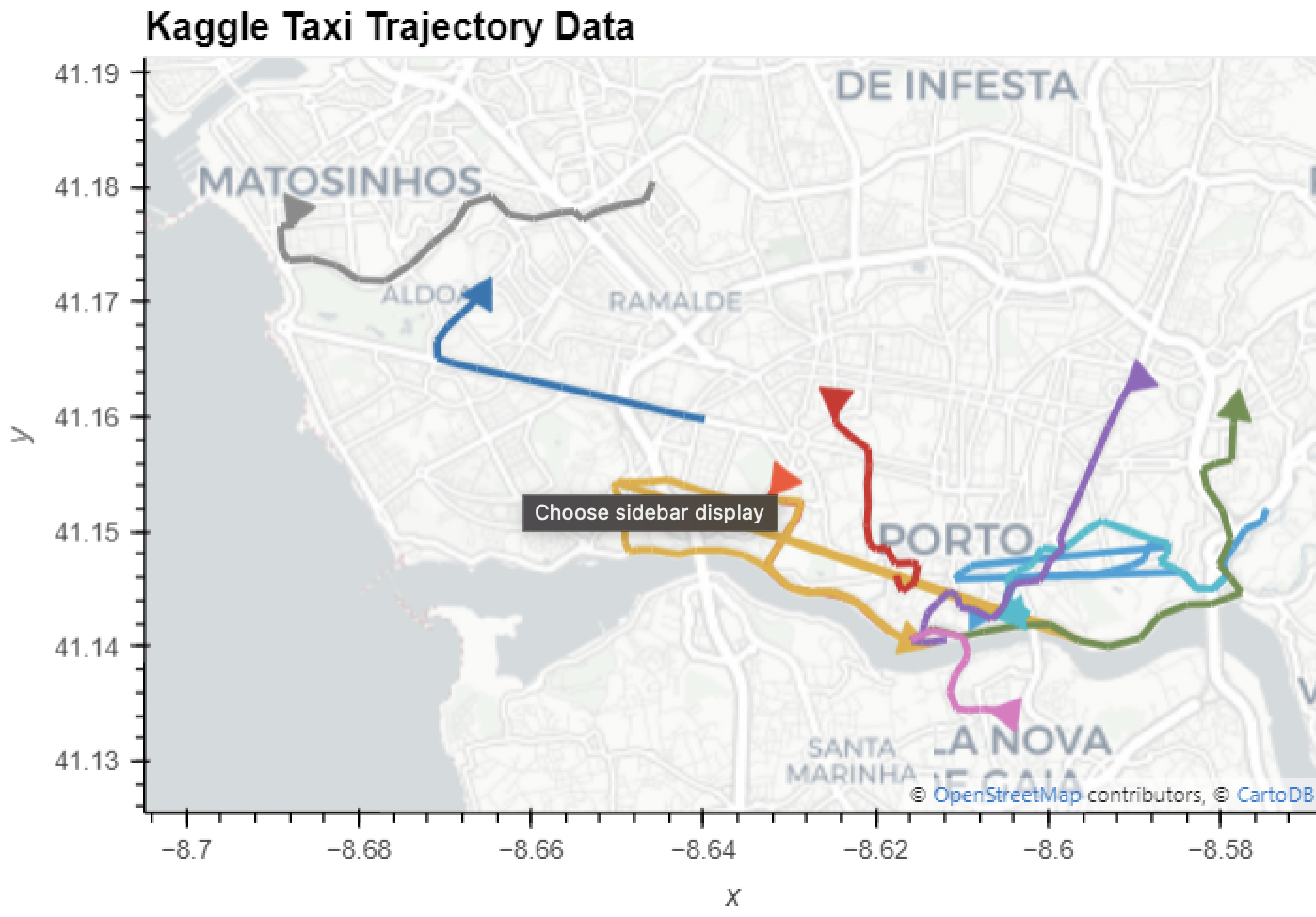

Shall we see what that looks like on a map?

Now that we have a series of timestamped coordinates for each trip, it's time to turn the taxi data into trajectories with MovingPandas. This step is crucial for gaining insights into taxi movement patterns.

We can use the code below to do this.

The results of this can be seen below:

Notice anything strange? We can see some of the trajectories seem to be taking strange routes, particularly those coloured orange and blue in Central Porto. These are caused by location errors in the data and need to be cleaned.

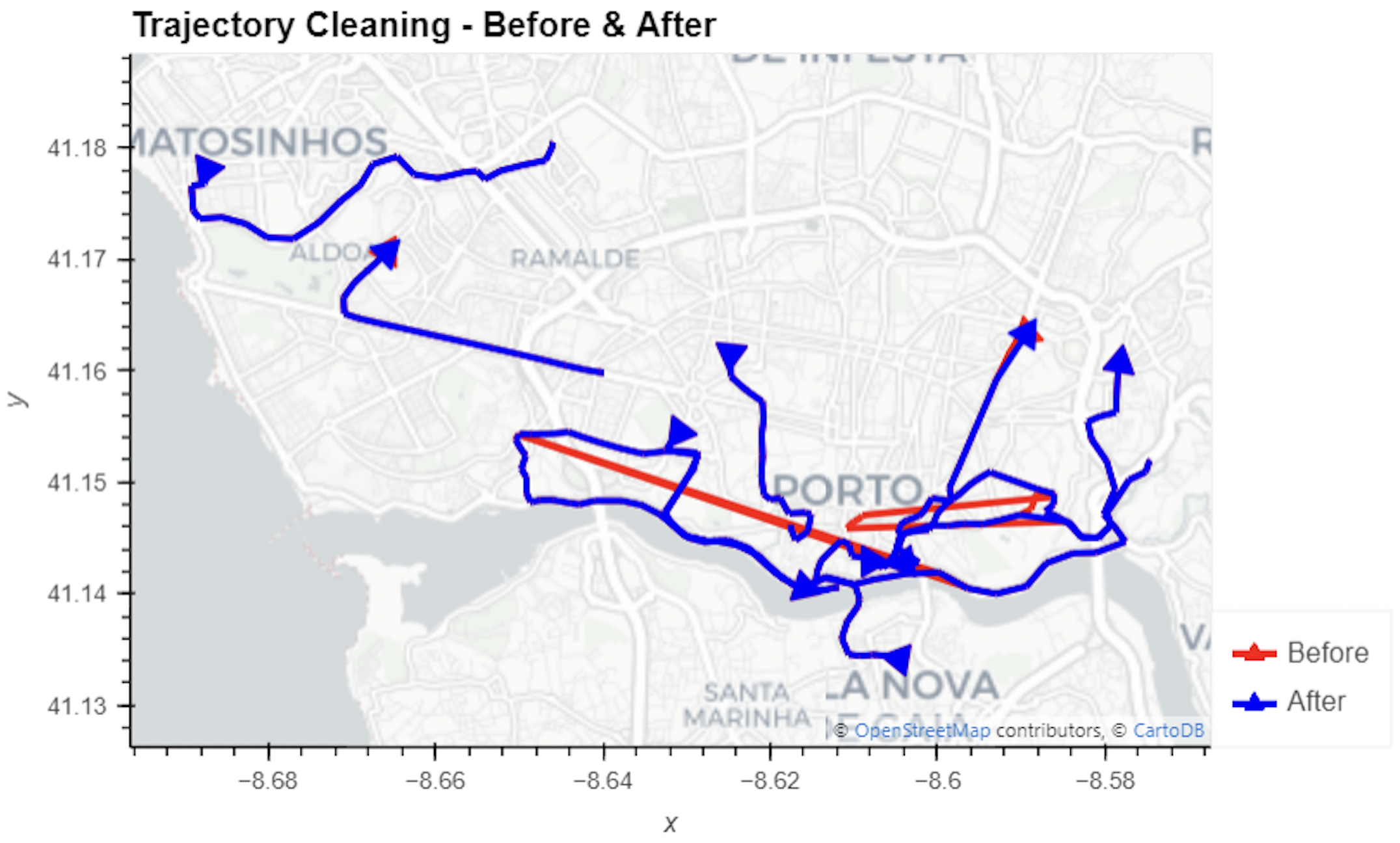

We can identify outliers in the data by examining travel speeds between consecutive trajectory fixes. Unrealistically high speed values (over 100 m/s) are caused by large jumps in the GPS trajectories. To clean these trajectories, we use MovingPandas' OutlierCleaner.
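To make the idea behind speed-based cleaning concrete, here is a plain-Python sketch. It illustrates the general technique under simple assumptions (great-circle distances, a trustworthy first fix) and is not necessarily how MovingPandas' OutlierCleaner works internally. The function names and sample fixes are hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def haversine_m(p, q):
    """Great-circle distance in metres between two (lon, lat) points in degrees."""
    lon1, lat1 = map(math.radians, p)
    lon2, lat2 = map(math.radians, q)
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def drop_speed_outliers(fixes, v_max=100.0):
    """Keep a fix only if the speed from the last kept fix is at most v_max (m/s).

    fixes: time-sorted list of (seconds, (lon, lat)). Assumes the first fix is valid.
    """
    if not fixes:
        return []
    kept = [fixes[0]]
    for t, pos in fixes[1:]:
        t_prev, pos_prev = kept[-1]
        dt = t - t_prev
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        if haversine_m(pos_prev, pos) / dt <= v_max:
            kept.append((t, pos))
    return kept


# Hypothetical trip with one large GPS jump at t=30.
fixes = [
    (0, (-8.6186, 41.1414)),
    (15, (-8.6190, 41.1417)),
    (30, (-8.5000, 41.3000)),  # roughly 20 km away from the previous fix
    (45, (-8.6198, 41.1423)),
]
cleaned = drop_speed_outliers(fixes)
print([t for t, _ in cleaned])
```

Comparing each fix against the last kept fix, rather than the previous raw fix, stops a single bad point from also knocking out the good point that follows it.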

We can visualize the before-and-after trajectories to see the impact of cleaning.

Let’s take a look at the difference below. The red lines are the original trajectories, whilst the blue are the results of our outlier cleaning - much more sensible!

Now that we have data we’re confident in, we can start to generate some insights.

A really useful technique for understanding the patterns in mobility data is to calculate space-time hotspots, which identify statistically significant clusters in your data in both space and time. But before we do that, we need to convert our data to a Spatial Index called H3.

Spatial Indexes are discrete global grid (DGG) systems with multiple resolutions available. They’re incredibly useful as a support geography, as their grid cells are geolocated by a short reference ID, rather than a long geometry description. This makes them lightweight to store and super quick to process - ideal for working with typically heavy data like mobility data. If you’d like to learn more about Spatial Indexes, head here to download a FREE copy of our ebook - Spatial Indexes 101!

H3 is one type of Spatial Index, popular for its hexagonal cells, which make it well suited to measuring and representing spatial trends; learn more about the power of hexagons for spatial analysis here.

Transforming your data to an H3 index couldn’t be easier - you can find a range of tools in the Analytics Toolbox and in Workflows, from simple geometry converters to enrichment functions which allow you to aggregate numerical variables.

However, as we’re working with trajectory data, we need to do something a little different: we’ll be clipping the trajectory data to H3 polygons.

The first step in this process is to create the geometries which represent the H3 polygons in our study area using the code below.

Next, we can use the code below to clip the trajectories to the newly created H3 polygons.

Next, in order to calculate space-time hotspots, we need to split the trajectories into hourly segments using MovingPandas' TemporalSplitter. The first code block below achieves this, whilst the second extracts the hour in which each trajectory segment ends.
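The hourly split can be pictured with a small standard-library sketch. It simply groups fixes by the hour they fall in and records the hour of each segment's last fix. This is a simplified stand-in, not TemporalSplitter itself: the function name and sample data are hypothetical, and segments with fewer than two fixes are dropped on the assumption that a trajectory needs at least two points.

```python
from datetime import datetime, timedelta, timezone
from itertools import groupby


def floor_to_hour(ts):
    """Truncate a datetime to the start of its hour."""
    return ts.replace(minute=0, second=0, microsecond=0)


def split_hourly(fixes):
    """Split a time-sorted list of (datetime, (lon, lat)) fixes into hourly segments."""
    segments = []
    for hour_start, group in groupby(fixes, key=lambda fix: floor_to_hour(fix[0])):
        segment = list(group)
        if len(segment) < 2:
            continue  # too short to form a trajectory segment
        segments.append({
            "hour_start": hour_start,
            "fixes": segment,
            "end_hour": segment[-1][0].hour,  # hour in which the segment ends
        })
    return segments


# Hypothetical trip that crosses the 09:00 boundary, sampled every 15 seconds.
start = datetime(2013, 7, 1, 8, 59, 30, tzinfo=timezone.utc)
coords = [(-8.6186, 41.1414), (-8.6190, 41.1417), (-8.6194, 41.1420), (-8.6198, 41.1423)]
fixes = [(start + timedelta(seconds=15 * i), c) for i, c in enumerate(coords)]

segments = split_hourly(fixes)
for seg in segments:
    print(seg["hour_start"].isoformat(), len(seg["fixes"]), seg["end_hour"])
```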

With both the start and end times calculated, we can extract the travel duration for each H3 cell using the code below - the final piece of the puzzle we need to run Space-Time hotspots!
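As a sketch of this aggregation, the snippet below sums travel time per cell and hour. It assumes the value fed into the hotspot analysis is the total number of seconds travelled in each cell during each hour. The field names (`h3`, `start`, `end`) and the cell IDs are hypothetical placeholders rather than real H3 indexes.

```python
from collections import defaultdict
from datetime import datetime, timezone

UTC = timezone.utc


def duration_by_cell_and_hour(segments):
    """Sum travel time in seconds per (cell, hour), keyed by the hour each segment ends in."""
    totals = defaultdict(float)
    for seg in segments:
        hour = seg["end"].replace(minute=0, second=0, microsecond=0)
        totals[(seg["h3"], hour)] += (seg["end"] - seg["start"]).total_seconds()
    return dict(totals)


# Hypothetical clipped segments: one row per trajectory piece inside a cell.
segments = [
    {"h3": "cell_a",
     "start": datetime(2013, 7, 1, 8, 10, 0, tzinfo=UTC),
     "end": datetime(2013, 7, 1, 8, 10, 45, tzinfo=UTC)},
    {"h3": "cell_a",
     "start": datetime(2013, 7, 1, 8, 40, 0, tzinfo=UTC),
     "end": datetime(2013, 7, 1, 8, 41, 0, tzinfo=UTC)},
    {"h3": "cell_b",
     "start": datetime(2013, 7, 1, 9, 5, 0, tzinfo=UTC),
     "end": datetime(2013, 7, 1, 9, 5, 30, tzinfo=UTC)},
]

durations = duration_by_cell_and_hour(segments)
for (cell, hour), seconds in sorted(durations.items()):
    print(cell, hour.isoformat(), seconds)
```

The result is one value per cell and time step, which is the shape a space-time statistic expects.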

Finally, load the data into your cloud data warehouse - and let’s analyze!

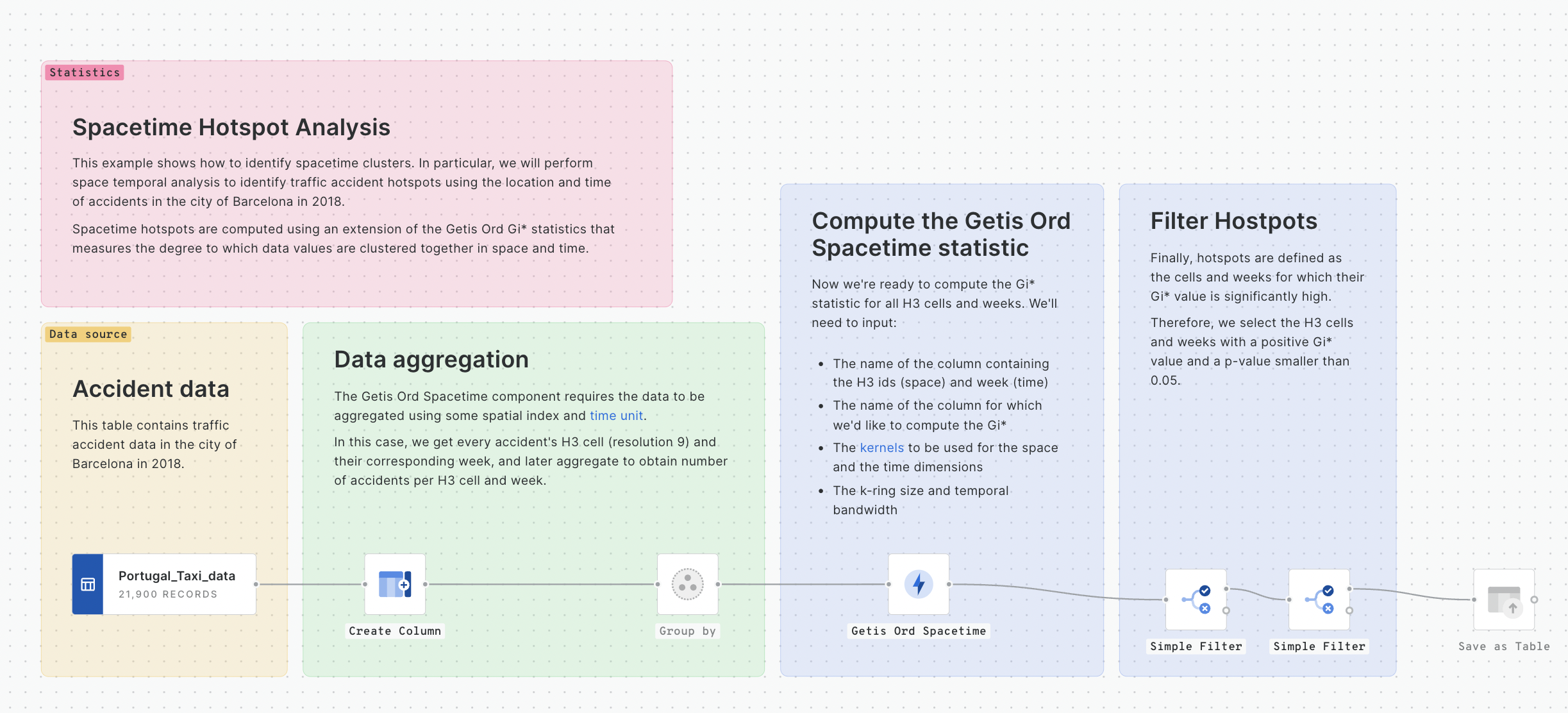

Now we’re ready to perform space-time hotspot analysis, which lets us identify and measure the strength of spatio-temporal patterns in our mobility data. We’ll be doing this with CARTO Workflows - a low-code, drag-and-drop interface for building analytical pipelines. The component we’ll be using to run this analysis is Getis-Ord* Space-Time; you can learn more about this statistic as well as other spatial hotspot techniques here. You can see this in action in the workflow below, which you can download as a template here.

This statistic can also be run using the below SQL code; for more details please refer to our documentation here.

In this tutorial, we will use a neighborhood size of 1 and a uniform kernel function for both the spatial and temporal dimensions. Shall we check out the results?
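Before looking at the map, it can help to see roughly what the statistic computes. The sketch below applies the textbook Getis-Ord Gi* formula with a uniform kernel (every neighbor has weight 1) and a neighborhood size of 1 in both space and time. It illustrates the general technique, not CARTO's implementation. Axial hexagon coordinates `(q, r)` stand in for H3 cell IDs, and all function names and data are hypothetical.

```python
import math


def hex_distance(a, b):
    """Grid distance between two hexagons given in axial (q, r) coordinates."""
    dq, dr = a[0] - b[0], a[1] - b[1]
    return (abs(dq) + abs(dr) + abs(dq + dr)) // 2


def getis_ord_spacetime(values, k_space=1, k_time=1):
    """Gi* z-scores for {((q, r), t): value} using a uniform space-time kernel."""
    keys = list(values)
    n = len(keys)
    mean = sum(values.values()) / n
    variance = max(0.0, sum(x * x for x in values.values()) / n - mean * mean)
    s = math.sqrt(variance)
    scores = {}
    for cell_i, t_i in keys:
        # Uniform kernel: each observation within the neighborhood has weight 1,
        # including the focal observation itself.
        neighbors = [values[(c, t)] for c, t in keys
                     if hex_distance(cell_i, c) <= k_space and abs(t_i - t) <= k_time]
        w_sum = len(neighbors)
        denom = s * math.sqrt((n * w_sum - w_sum ** 2) / (n - 1))
        scores[(cell_i, t_i)] = (sum(neighbors) - mean * w_sum) / denom if denom > 0 else 0.0
    return scores


# Hypothetical study area: hexagons within 2 rings of the origin, 4 time steps.
RADIUS = 2
cells = [(q, r) for q in range(-RADIUS, RADIUS + 1)
         for r in range(-RADIUS, RADIUS + 1) if abs(q + r) <= RADIUS]
values = {}
for cell in cells:
    for t in range(4):
        # Elevated travel time in the centre of the grid during the last two time steps.
        hot = hex_distance(cell, (0, 0)) <= 1 and t >= 2
        values[(cell, t)] = 10.0 if hot else 1.0

scores = getis_ord_spacetime(values)
hottest = max(scores, key=scores.get)
print(hottest, round(scores[hottest], 2))
```

Large positive scores mark hotspots (clusters of high values), large negative scores mark coldspots, and values beyond roughly ±1.96 are conventionally treated as significant at the 95% level.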

Explore the map in full screen here. We’ve used a SQL Parameter here to allow the end user to filter the hotspots to the date and time period they’re interested in.

With the results of this analysis, taxi companies can make more intelligent decisions for optimizing resources around spatio-temporal demand.

Combining MovingPandas and CARTO opens up exciting possibilities for analyzing complex and large-scale trajectory data. This approach can help you gain valuable insights and drive decision-making for any organization that depends on understanding patterns not just in space, but also in time. By following this tutorial, you can adapt these techniques to a wide range of mobility datasets and use them to make informed decisions.

Want to learn more about building more scalable spatial data science pipelines? Get in touch for a free demo with one of our experts!

This tutorial was developed as part of the EMERALDS project, funded by the Horizon Europe R&I program under GA No. 101093051.
