Z Score Visualization: How to Identify Hotspots with BigQuery

Most Data Scientists and Analysts understand that visualizing datasets can be a crucial way for users to engage with data. Knowing where median household income is across a location can drive the user to find conclusive answers. But business analysts aren’t just focusing on one variable at a time they are looking for multiple ways to measure and visualize statistics quickly and easily.

Index scores (or Z scores) have often been used in geospatial analytics to measure and rank how one location is compared to its peers. Local governments and municipalities have been able to tap into index scoring to take the viewer to some actionable insights such as the pavement condition index and equity index scoring. Healthcare officials are identifying areas of highest risk based on combining and weighting many variables to determine vulnerability in a given population. Additionally the retail industry is using index scoring for deeper insights into customer segmentation or spending habits. Each industry has their own advantages for using index scores and this article will show how Data Scientists and Analysts can create these scores for their analytical use cases.

Before jumping into visualizing index scores here is a brief overview of my methodology in calculating an index score.

Step 1. Standardizing Features

Data variables are often presented to us through another type of statistical aggregation. Medians averages totals percentages etc. can present some complications if we were to combine two different statistics into one score. While many arguments can be made for normalization versus standardization this article will dive deeper into standardizing.

A z-score allows us to understand how many standard deviations above or below the mean for a given data point.

The calculation itself is fairly straightforward. Here is a simple z-score:

z = (x-mean)/std.

Breaking this down further:

- ‘x’ is the particular data point you are calculating the z-score for

- ‘mean’ is the mean of all the observations in the dataset

- ‘std’ is the standard deviation for all the observations in the dataset

Calculating a z-score is one option you can use to scale your variables in machine learning.

For Python users Scikit-learn’s standard scaler is a z-score calculation as well as pyts standard scaler.

BigQuery’s ML standard scaler is taking this to a whole new level especially if you’re working with big data (this will be much faster than doing it in a pandas dataframe). This article will focus mostly on using BigQuery.

Standard Scaling - what is it?

Scale — To change the scale of a dataset means changing the range of values of the dataset. For example it’s possible to scale a range of ages [21–75] down to a range of [0–1]. Generally changing the scale (or scaling) won’t change the shape of the data’s distribution.

Standardize — Standardizing generally means changing the data’s values so that the standard deviation of the data = 1 (called unit variance). This often goes hand-in-hand with removing the distribution’s mean (setting mean = 0). This tends to shift the shape of the data towards the shape of a normal distribution. Data is often scaled implicitly when standardized.

Each function can be very straightforward:

Python Example:

BigQuery’s SQL Function:

Step 2. Weighting

Weighting can be an optional choice but for many business users this can be a critical component. Weighting symbolizes the importance of a feature. In its simplest form weighting is multiplying your variable by a numerical value.

While each user has their own secret sauce for a unique weighted index score I’ve found that multiplying from 0.0 to 1 can be highly effective.

For example if your variable is ½ as important I would multiply that number by 0.5

Step 3. Capping

I’ve found that a lot of Data Scientists have many ways to identify and remove outliers. But as a geospatial analyst I don’t want to consider those outliers as wrong-doers. I want to keep and visualize my outliers but do this without confusing the viewer.

{% include icons/icon-quotes.svg %} In most large data sets 99% of values have a Z-score between -3 and 3 meaning they lie within three standard deviations above or below the mean.

The Investopedia Team

I have learned to cap my z-score values from -3 to 3 which gives enough room for variance and also reduces the amount of huge outliers that could skew the overall index score.

For Python I had used a simple if else statement.

For BigQuery I had used a simple case when calculation

Step 4. Compiling Your Variables

Now that each variable in our table has been standardized weighted and capped we can now combine each variable into one score.

For those of you who only analyzed one variable - you can skip to the next step.

Creating the composite score is quite simple. Create a z-score adding your individual variable’s scores.

For BigQuery

Step 5. Yielding a Final Index Score That Will Make Sense to Your Viewers

While there are many ways to present your final score I have found that business users need to understand a digestible way to interpret the results.

If you had capped the datasets correctly this final index score will yield a result of 0-200.Following

z = (1+ (sum_score/max of sum_score)) * 100

Comparing this to our original z-score we are looking at 1 standard deviation away from the composite score / max.

We then multiplied this by 100 to give us a final percentage.

A score of 100 = Average. Any score above 100 is above average while a score below 100 is considered below average.

Example:

- A score of 146 = 46% above average

- A score of 46 = 54% below average

BigQuery Example

Advanced | Heat Maps Through Index Scoring

Often heat maps and index scores are visualized through geographical boundaries such as postal codes or block groups. But this might not provide enough understanding of real-world boundaries. At times one postal code can encompass a barrier such as a river or highway separating two distinct geographies but only visualized by one single boundary.

Geospatial analytics should be unique to real-world geographies.

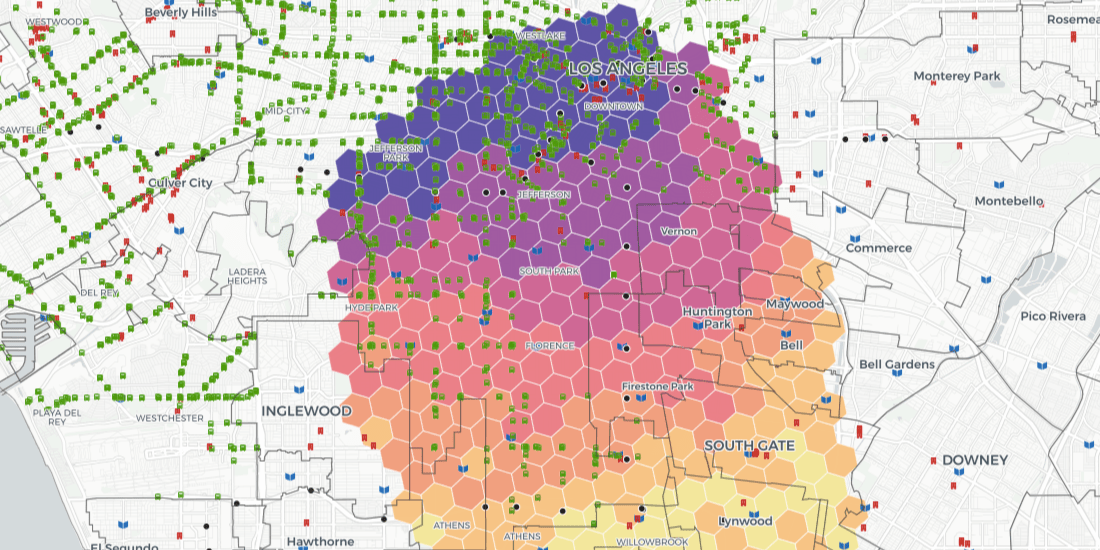

In the Python example below ten-minute driving distances were used to gather variables and score. The end visualization leverages H3 cells (tesselations or hexagons). Each cell represents a ten-minute driving distance from the cells’ centroid.

This can be accomplished quite easily through these easy steps:

- Create H3 cells within your area of interest

- Within each cell maintain or create a unique identifier to join back to

- Generate centroids off of the cells

- From each centroid create driving distances polygons

- Maintaining the same unique identifier as seen in the H3 cells

- Enrich interpolate or spatial join your variables to the drive-time polygons

- Score and rank through indexing

- Join or merge the scores to the original H3 cells

Follow along in this Python Example: Accessibility to Healthcare within a 10-Minute Driving Distance.

Using CARTOframes I was able to easily create H3 cells access the Data Services API to generate isolines (driving distances) and quickly visualize and share my results straight from the colab notebook. (Users will need a CARTO account to access the isoline functions within the Data Services API).

One of my favorite components of using CARTOframes is the ability to visualize my work in an interactive map complete with widgets that can filter. I can share these preliminary results with my business users for initial feedback.

I was also able to access the Enrichment functions to provide demographic information from the Data Observatory for business users to access the map themsleves for exploration.

Users were looking to see how areas of below average household income ranked. The US Bureau lists that the average household income for Los Angeles is $68K annually. By filtering down the areas that are below the average I was able to fine-tune and explore how those areas ranked:

Combining BigQuery and CARTOframes in this way allows Data Scientists and Analysts to simplify the method for measuring and ranking how one location compares to another. As has been shown this can be achieved by first enabling the calculation of Z scores and then following up with the ability to visually share these results to other users within the business for rapid feedback.

Want to get started? Sign up for a free account

.png)

.jpg)